Claims that a chatbot can diagnose medical conditions as accurately as a GP have sparked a row between the software’s creators and UK doctors.

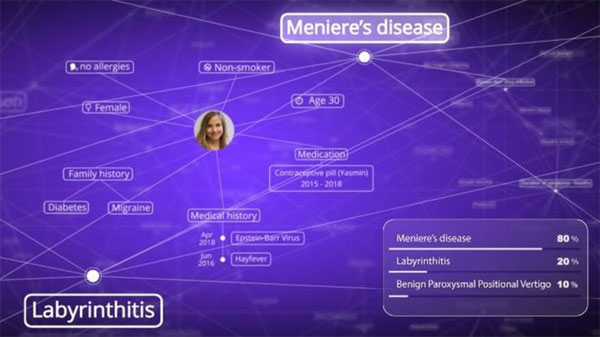

The artificial intelligence software provides what it determines to be the most likely diagnoses — Photo: BABYLON

Babylon, the company behind the NHS GP at Hand app, says its follow-up software achieves medical exam scores that are on a par with those of human doctors.

It revealed the artificial intelligence bot at an event held at the Royal College of Physicians.

But another medical professional body said it doubted the AI’s abilities.

“No app or algorithm will be able to do what a GP does,” said the Royal College of General Practitioners.

“An app might be able to pass an automated clinical knowledge test – but the answer to a clinical scenario isn’t always cut and dried.

“There are many factors to take into account, a great deal of risk to manage, and the emotional impact a diagnosis might have on a patient to consider.”

The chatbot was unveiled at an event in London

But NHS England chairman Sir Malcolm Grant – who attended the unveiling – appeared to be more receptive.

“It is difficult to imagine the historical model of a general practitioner, which is after all the foundation stone of the NHS and medicine, not evolving,” he said.

“We are at a tipping point of how we provide care.

“This is why we are paying very close attention to what you’ve been doing and what other companies are doing.”

Higher score

The chatbot AI has been tested on what Babylon said was a representative set of questions from the Membership of the Royal College of General Practitioners exam.

The MRCGP is the final test set for trainee GPs to be accredited by the organisation.

Babylon said that the first time its AI sat the exam, it achieved a score of 81%.

It added that the average mark for human doctors was 72%, based on results logged between 2012 and 2017.

But the RCGP said it had not provided Babylon with the test’s questions and had no way to verify the claim.

“The college examination questions that we actually use aren’t available in the public domain,” added Prof Martin Marshall, one of the RCGP’s vice-chairs.

Babylon said it had used example questions published directly by the college and that some had indeed been made publicly available.

“We would be delighted if they could formally share with us their examination papers so I could replicate the exam exactly. That would be great,” Babylon chief executive Ali Parsa told the BBC.

To further test the AI, Babylon partnered with doctors at two US organisations – Stanford Primary Care and Yale New Haven Health – as well as doctors from the Royal College of Physicians.

It said they had developed 100 real-life scenarios to test the AI.

The company added that it expected its chatbot’s diagnostic skills to improve further as a consequence.

Probable causes

Babylon has demonstrated its chatbot being used as a voice-controlled “skill” on Amazon’s Alexa platform.

While Babylon’s existing GP at Hand service refers users to a human doctor if the app suspects a medical problem, the new chatbot makes a diagnosis itself – offering several possible scenarios along with a percentage-based estimate of each one being correct.

“The suggestion that this can replace doctors is the key issue for us,” said Prof Marshall.

But Mr Parsa disputed the idea that doctors would be left out in the cold, explaining that the intention was still for a medic to follow up the AI’s diagnoses.

“We are fully aware that an artificial intelligence on its own cannot look after a patient. And that is why we complement it with physicians,” he said.

“It is never going to replace a doctor, but just to help.”

Rwandan connection

Babylon’s stated ambition is to deliver affordable health care to people all over the world.

Since 2016, it has been working in partnership with the government of Rwanda.

The country’s health care service was decimated after the genocide in 1994, in which more than 800,000 people were killed.

Babylon has two million registered users in Rwanda and has conducted tens of thousands of consultations.

Since smartphone use is not widespread in the country, people currently call nurses who follow symptom-checking prompts that appear to them via computer screens.

Information gathered as a result has been used to improve the chatbot.

Source: BBC
